Evaluation

Evaluation is the process of assessing the performance and effectiveness of your LLM-powered applications. It involves testing the model's responses against a set of predefined criteria or benchmarks to ensure that they meet the desired quality standards and fulfill the intended purpose. This process is vital for building reliable applications.

LangSmith helps with this process in a few ways:

It makes it easier to create and curate datasets via its tracing and annotation features.

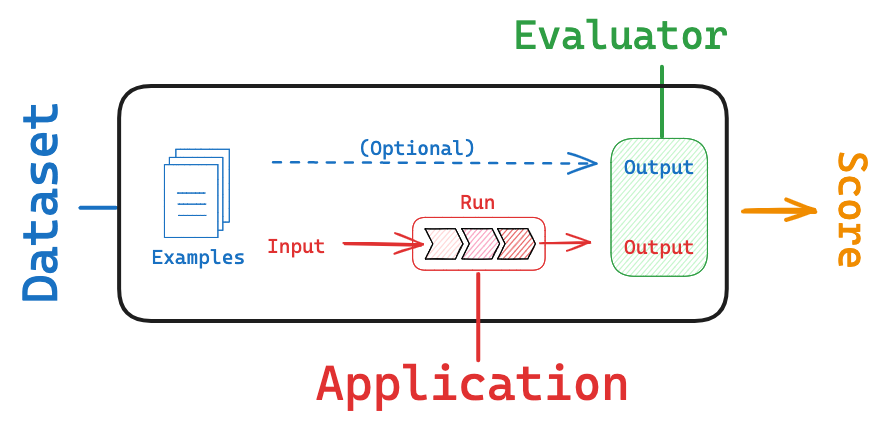

It provides an evaluation framework that helps you define metrics and run your app against your dataset.

It allows you to track results over time and automatically run your evaluators on a schedule or as part of CI.
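To make the idea of "defining a metric and running your app against a dataset" concrete, here is a minimal sketch in plain Python. It deliberately does not use the LangSmith SDK: `Example`, `exact_match`, `run_evaluation`, and `toy_app` are hypothetical names invented for illustration, and the exact-match metric is only one simple choice among many.

```python
from dataclasses import dataclass
from typing import Callable, Sequence


@dataclass
class Example:
    """One dataset row: an input and the reference (expected) output."""
    inputs: str
    expected: str


def exact_match(output: str, expected: str) -> float:
    """Hypothetical metric: 1.0 if the strings match ignoring case and
    surrounding whitespace, else 0.0."""
    return 1.0 if output.strip().lower() == expected.strip().lower() else 0.0


def run_evaluation(
    app: Callable[[str], str],
    dataset: Sequence[Example],
    metric: Callable[[str, str], float],
) -> dict:
    """Run the app on every example, score each output, and aggregate."""
    scores = [metric(app(ex.inputs), ex.expected) for ex in dataset]
    mean = sum(scores) / len(scores) if scores else 0.0
    return {"n": len(scores), "mean_score": mean}


def toy_app(question: str) -> str:
    """Stand-in for an LLM-powered application (assumption: a lookup table)."""
    answers = {"capital of france?": "Paris", "2 + 2?": "4"}
    return answers.get(question.lower(), "I don't know")


dataset = [
    Example("Capital of France?", "paris"),
    Example("2 + 2?", "4"),
    Example("Largest planet?", "Jupiter"),
]

report = run_evaluation(toy_app, dataset, exact_match)
print(report)
```

Storing one such report per run, for example keyed by date or commit, is the simplest way to track results over time; a hosted evaluation framework takes over that bookkeeping.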

To learn more, check out this LangSmith guide.